Aurich Lawson | Getty Images

“Artificial Intelligence” as we know it today is, at best, a misnomer. AI is in no way intelligent, but it is artificial. It remains one of the hottest topics in industry and is enjoying renewed interest in academia. This isn’t new: the world has been through a series of AI peaks and valleys over the past 50 years. What makes the current flurry of AI successes different is that modern computing hardware is finally powerful enough to fully implement some wild ideas that have been hanging around for a long time.

Back in the 1950s, in the earliest days of what we now call artificial intelligence, there was a debate over what to name the field. Herbert Simon, co-developer of both the Logic Theory Machine and the General Problem Solver, argued that the field should have the much more anodyne name of “complex information processing.” This certainly doesn’t inspire the awe that “artificial intelligence” does, nor does it convey the idea that machines can think like humans.

However, “complex information processing” is a much better description of what artificial intelligence actually is: parsing complicated data sets and attempting to make inferences from the pile. Some modern examples of AI include speech recognition (in the form of virtual assistants like Siri or Alexa) and systems that determine what’s in a photograph or recommend what to buy or watch next. None of these examples is comparable to human intelligence, but they show we can do remarkable things with enough information processing.

Whether we call this field “complex information processing” or “artificial intelligence” (or the more ominously Skynet-sounding “machine learning”) is irrelevant. Immense amounts of work and human ingenuity have gone into building some truly incredible applications. As an example, look at GPT-3, a deep-learning model for natural language that can generate text indistinguishable from text written by a person (though it can also go hilariously wrong). It is backed by a neural network model that uses more than 170 billion parameters to model human language.

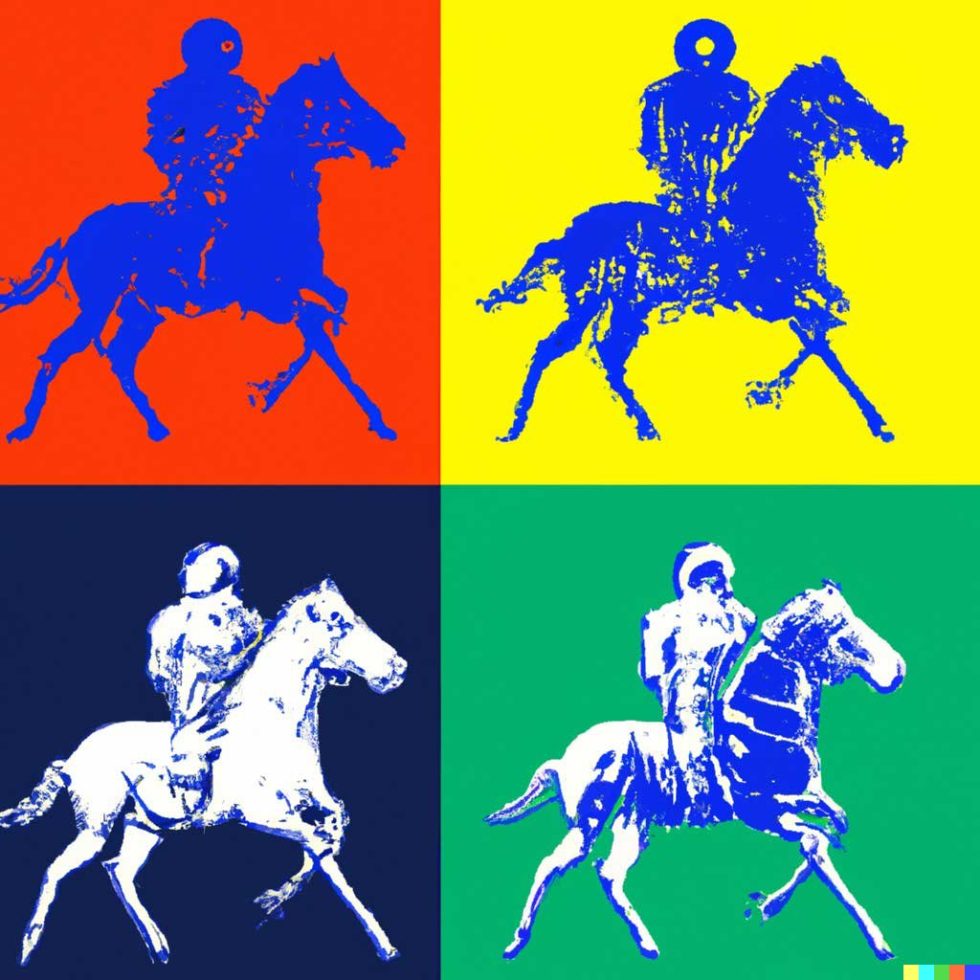

Built on top of GPT-3 is the tool called Dall-E, which will produce an image of any fantastical thing a user requests. The updated 2022 version of the tool, Dall-E 2, lets you go even further, as it can “understand” styles and concepts that are quite abstract. For instance, asking Dall-E to visualize “an astronaut riding a horse in the style of Andy Warhol” will produce a number of images such as this:

Dall-E 2 does not perform a Google search to find a similar image; it creates a picture based on its internal model. This is a new image built from nothing but math.

Not all applications of AI are as groundbreaking as these. AI and machine learning are finding uses in nearly every industry and are quickly becoming a must-have, powering everything from recommendation engines in retail to pipeline safety in oil and gas and diagnosis and patient privacy in health care. Not every company has the resources to develop tools like Dall-E from scratch, so there is a lot of demand for affordable, accessible toolsets. The challenge of meeting that demand has parallels to the early days of business computing, when computers and computer programs were quickly becoming the technology businesses needed. While not everyone needs to develop the next programming language or operating system, many companies want to leverage the power of these new fields of study, and they need similar tools to help them.